01: Background

Embodied Labs is an immersive training platform that uses virtual reality to transform the way caregivers, healthcare professionals, and educators understand and empathize with people experiencing health conditions such as dementia, vision impairment, and hearing loss. By placing users in the first-person perspective of affected individuals, this platform can foster a deeper understanding of the challenges they face, improving the quality of care and support they receive.

My role: building a 0-to-1 app designed to be a one-stop shop.

We needed a centralized platform where users could seamlessly set up VR sessions, troubleshoot issues, and monitor multiple users' experiences in real time. Before this app, users had to rely on trial and error, or calls to customer support, to navigate the system. We were also switching from a complicated tethered VR system (with lots of cable wrangling) to the wireless Quest 3, which made some features, such as mirroring VR to a screen, harder to re-implement. Our goal was to simplify the new process of running sessions, reduce the frustrations of the old workflow, and improve the overall user experience.

02: Human Experience Exploration

Many of the company's past design decisions were reactive, driven by customer support interactions when users ran into issues or when it came time to renew. I saw an opportunity to take a more proactive, data-driven approach by engaging with users directly, so I spearheaded the company's first round of user research: observational studies, first-person interviews, and surveys.

User Research

Our research began with observational studies, followed by open-ended interviews. We visited both established and prospective users at Kern County Aging & Adult Services and the Colorado Department of Human Services. We asked users to "think aloud" as they navigated their sessions—from setup (turning on VR headsets and computers) to hardware breakdown. This allowed us to better understand their thought processes, pain points, and overall experience.

We collected both quantitative and qualitative data, including:

Observational Study Metrics 📈

- task time

- task success

- abandonment rate of interactions

- number of attempts per task

Interview & "Think Aloud" Insights 🧠

- frustration triggers

- decision-making difficulties

- satisfaction scores

- confidence levels
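
As a rough illustration of how the observational study metrics listed above could be tallied from session notes, here is a minimal Python sketch. The `TaskAttempt` record, its field names, and the sample rows are all hypothetical and invented for this example; they are not the study's actual data or tooling, and the metric definitions (per-participant totals and rates) are one reasonable choice among several.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskAttempt:
    """One observed attempt at a task (hypothetical record layout)."""
    participant: str
    task: str
    seconds: float
    completed: bool  # participant finished the task on this attempt
    abandoned: bool  # participant gave up on the task during this attempt


def summarize(attempts):
    """Return per-task time, success, abandonment, and attempt metrics."""
    by_task = {}
    for a in attempts:
        by_task.setdefault(a.task, []).append(a)

    summary = {}
    for task, rows in by_task.items():
        # Total time each participant spent on the task across attempts.
        totals = {}
        for r in rows:
            totals[r.participant] = totals.get(r.participant, 0.0) + r.seconds
        n = len(totals)
        succeeded = {r.participant for r in rows if r.completed}
        gave_up = {r.participant for r in rows if r.abandoned}
        summary[task] = {
            "mean_task_time_s": mean(totals.values()),
            "success_rate": len(succeeded) / n,
            "abandonment_rate": len(gave_up) / n,
            "attempts_per_participant": len(rows) / n,
        }
    return summary


# Made-up rows for illustration only, not real study data.
sample = [
    TaskAttempt("P1", "pair headset", 40.0, completed=False, abandoned=False),
    TaskAttempt("P1", "pair headset", 20.0, completed=True, abandoned=False),
    TaskAttempt("P2", "pair headset", 30.0, completed=False, abandoned=True),
]
report = summarize(sample)
print(report["pair headset"])
```

Keeping each attempt as its own row means the same log can answer all four questions (time, success, abandonment, attempts) without re-watching session recordings.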

We sorted user quotes from the "think-aloud" sessions and post-session interviews (shown in yellow) into four key categories (shown in purple). The quotes below are a sample of the overall insights.

Insights

Based on our primary user research, the design team identified the following three key insights:

03: Design Process & Ideation

While our previous system was functional and engaging, it lacked the reliability and usability our users needed. My role was to translate these pain points into intuitive, scalable design solutions, in the form of an app built around the features shown below. With insights in hand, I began a design process focused on iteration, collaboration, and rapid feedback.

Wireframes

04: Designs & Revisions

Final Designs

This UI matches the branding of the Embodied Labs website with consistent colors, fonts, and layout. I focused on making it simple and intuitive for facilitators who might not be very tech-savvy. The left-hand dashboard gives them a clear path to follow as they set up a session, starting with choosing a lab and managing users. I also added a guided setup button that walks them through the full process from turning on VR headsets to what to say when the session begins. It’s been really helpful for building user confidence.

Revisions

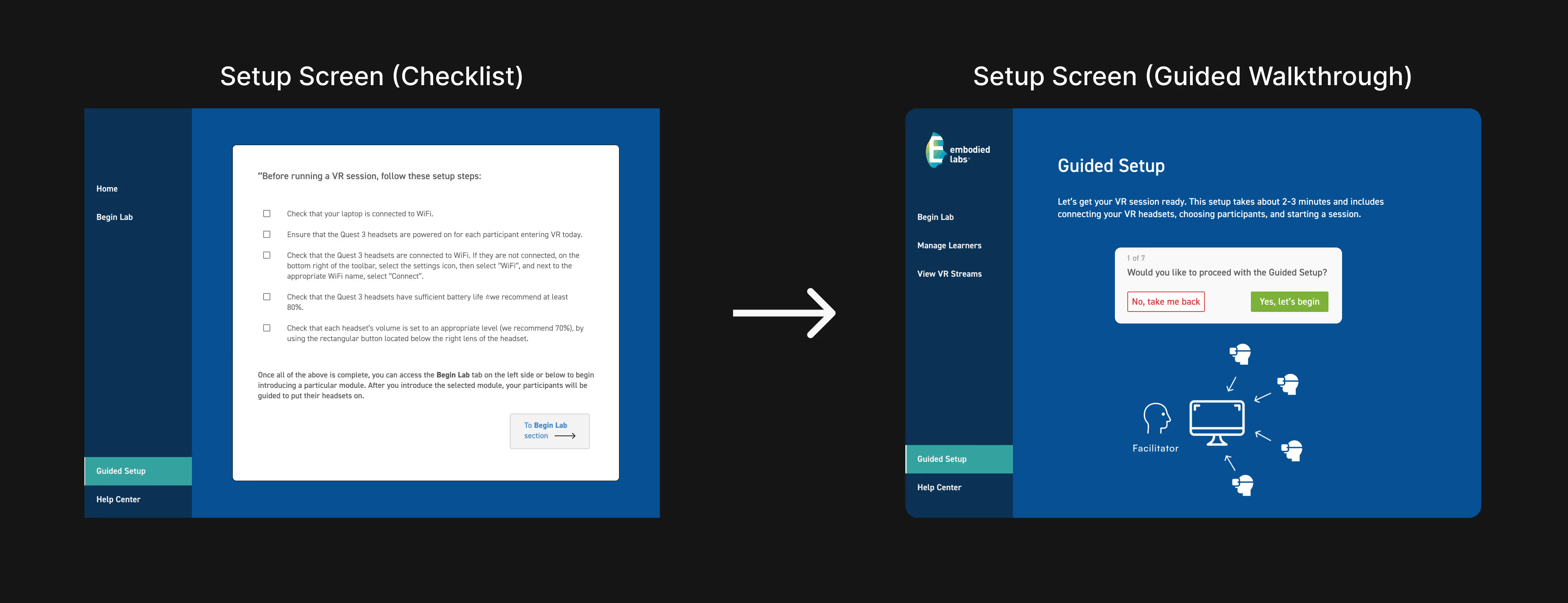

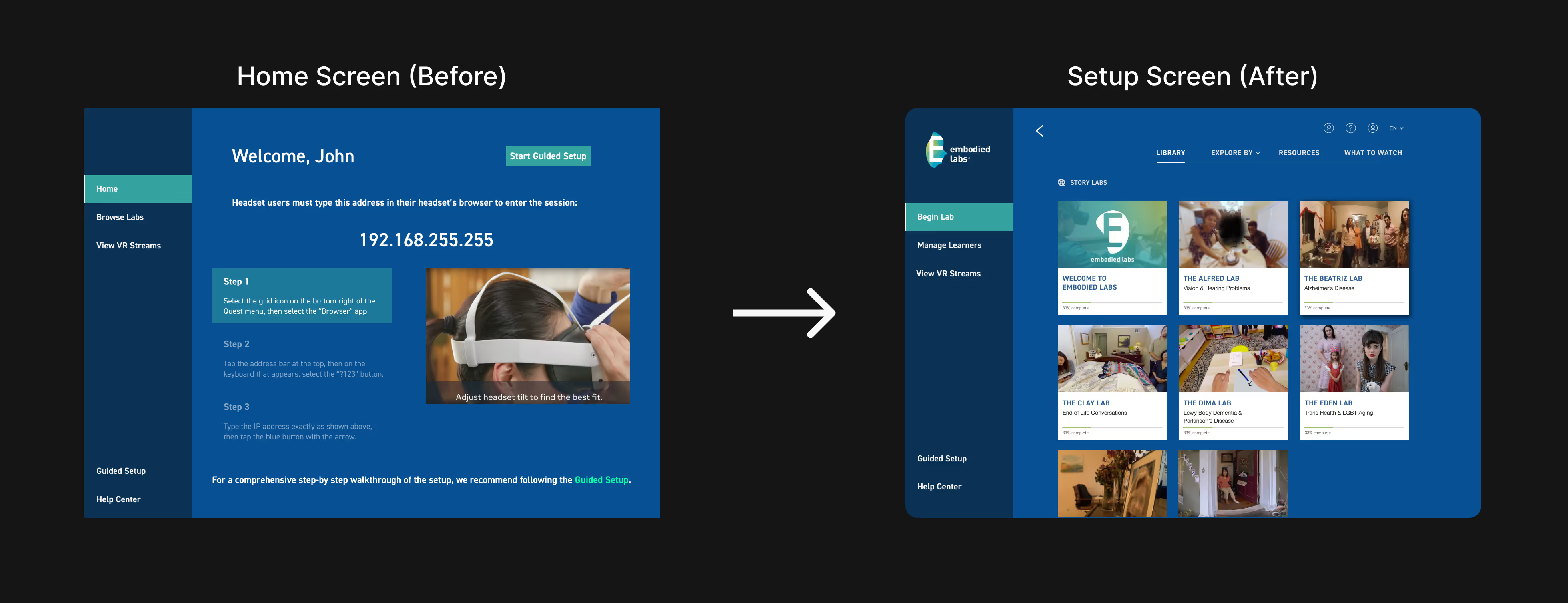

No good design process is ever linear! Iteration is where the real progress happens. In this case, the first major revision came when we moved away from manual IP address entry for connecting VR headsets to our app. Originally, facilitators had to type in a local IP to sync headsets with a laptop, but we later built a local network discovery feature that lets the system automatically detect nearby devices, making setup faster and less error-prone. The second revision was a transition from a static checklist to an interactive guided setup. The checklist was a temporary solution for early users who needed a quick reference before the full onboarding experience was ready. It helped pave the way for a smoother, more supportive walkthrough later on.
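
For readers curious what local network discovery can look like under the hood, here is a minimal Python sketch of one common approach: the laptop sends a UDP broadcast probe and collects replies from headsets on the same network. This is not the actual Embodied Labs implementation. The port number, probe message, JSON reply format, and the function names `parse_reply` and `discover` are all assumptions made for illustration, and it presumes each headset runs a small responder that answers the probe.

```python
import json
import socket
import time

DISCOVERY_PORT = 50000        # hypothetical port, chosen for this sketch
PROBE = b"DISCOVER_HEADSETS"  # hypothetical probe message


def parse_reply(payload: bytes, sender_ip: str):
    """Turn a headset's JSON reply into a record, or None if malformed."""
    try:
        data = json.loads(payload.decode("utf-8"))
    except ValueError:  # covers bad UTF-8 and invalid JSON
        return None
    if not isinstance(data, dict) or "name" not in data:
        return None
    return {"name": str(data["name"]), "ip": sender_ip}


def discover(timeout_s=2.0, address="255.255.255.255", port=DISCOVERY_PORT):
    """Broadcast a probe and collect headset replies until the timeout."""
    found = {}
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(PROBE, (address, port))
        deadline = time.monotonic() + timeout_s
        while True:
            remaining = deadline - time.monotonic()
            if remaining <= 0:
                break
            sock.settimeout(remaining)
            try:
                payload, (ip, _port) = sock.recvfrom(1024)
            except socket.timeout:
                break
            record = parse_reply(payload, ip)
            if record:
                found[ip] = record  # one entry per headset IP
    return list(found.values())
```

In a flow like this, the app would call `discover()` when the facilitator opens the connection screen and list the returned headsets by name, so nobody has to read or type an IP address.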

We moved from a manual IP entry screen with step-by-step instructions and videos to a clean, centralized home screen once we found a reliable workaround. This simplified setup and gave facilitators faster access to core features, so they can get straight to the meat and potatoes.